Siva Power, the CIGS thin film company that re-emerged last year with a new team and approach to the market, is out with an ambitious cost roadmap for its modules.

Before getting into the details of Siva's plan, it's worth remembering one important number related to CIGS thin film: twenty.

That's the approximate number of companies attempting to scale the technology that have gone bankrupt, gone silent, shifted strategy or been acquired since 2009. Very few of the venture capitalists who shoveled billions of dollars into the technology ever saw their money again.

Brad Mattson, the CEO of Siva Power, said he's learned from the mistakes of companies that tried to scale too quickly or overestimated their ability to reduce costs. In 2011, when Siva Power was still called Solexant, Mattson took the helm at the company, ditched plans to build a 100-megawatt factory, and focused on R&D. After years of experimenting with different materials and production processes, Siva eventually settled on co-evaporated CIGS on large glass substrates.

"Historically, co-evaporation has yielded the highest overall efficiency of all the CIGS deposition processes" compared to roll-to-roll, sputtering and other techniques, said Shyam Mehta, GTM Research's lead upstream solar analyst.

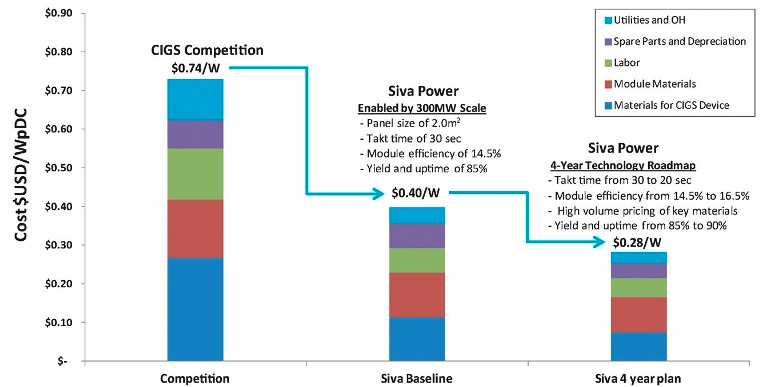

Mattson called the technology "a gift of physics" that offers the highest thin film efficiencies and the fastest production process. With $60 million in venture funding, Siva plans to build a 300-megawatt plant and eventually produce modules for 28 cents per watt over the next four years, assuming the facility is ever actually built.

Below is the company's newly released cost roadmap, which Mehta notes is "essentially a bunch of numbers in a spreadsheet." Siva doesn't even have its pilot line fully built yet.

Source: PRNewsFoto/Siva Power

The projections factor in labor, energy and water requirements, equipment needs, materials and overhead. Siva says its first 300-megawatt production line will produce CIGS modules at 40 cents per watt. After another two years of operation, the company believes it can get all-in costs down to 28 cents per watt.

The Department of Energy has set a production cost target of 50 cents per watt by 2020. In March, JinkoSolar reported that it was producing crystalline silicon modules for 48 cents per watt. According to GTM Research's analysis, top Chinese producers could be making solar modules for 36 cents per watt by 2017.

Mattson told GTM's Eric Wesoff that he believes those numbers are "completely unsustainable" because they don't factor in large subsidies from the Chinese government.

So is Siva's plan "sustainable," considering that the company hasn't even built out its first pilot production facility? Mattson continues to talk about the "gigawatt era" in solar. But only one CIGS company, Solar Frontier, has gotten there so far, and Siva isn't anywhere close.

"It is not outside the realm of possibility, assuming they do hit their goals for scale, efficiency and manufacturing yield, which are undoubtedly ambitious," said Mehta.

The most obvious issue is timing. Due to technical problems related to deposition, CIGS thin film producers have historically underestimated their time to scale. "History has shown that almost all thin film startups have taken significantly longer to scale up" than projected, said Mehta.

The biggest technical challenge for Siva will be ensuring uniform deposition on the large glass substrates while keeping production levels high. (Applied Materials also used large glass substrates for amorphous silicon thin film. That ended poorly, mostly due to efficiency issues.)

"This is where it's fair to be skeptical, even though Siva's team is world-class," said Mehta.

For now, Siva's cost structure will sit in a spreadsheet untested until the firm builds out its first production line.

Greentech Media (GTM) produces industry-leading news, research, and conferences in the business-to-business greentech market. Our coverage areas include solar, smart grid, energy efficiency, wind, and other non-incumbent energy markets. For more information, visit greentechmedia.com, follow us on Twitter: @greentechmedia, or like us on Facebook: facebook.com/greentechmedia.

Authored by:

Stephen Lacey

Stephen Lacey is a Senior Editor at Greentech Media, where he focuses primarily on energy efficiency. He has extensive experience reporting on the business and politics of cleantech. He was formerly Deputy Editor of Climate Progress, a climate and energy blog based at the Center for American Progress. He was also an editor/producer with Renewable Energy World. He received his B.A. in ...
